Authors:

(1) Omri Avrahami, Google Research and The Hebrew University of Jerusalem;

(2) Amir Hertz, Google Research;

(3) Yael Vinker, Google Research and Tel Aviv University;

(4) Moab Arar, Google Research and Tel Aviv University;

(5) Shlomi Fruchter, Google Research;

(6) Ohad Fried, Reichman University;

(7) Daniel Cohen-Or, Google Research and Tel Aviv University;

(8) Dani Lischinski, Google Research and The Hebrew University of Jerusalem.

Table of Links

- Abstract and Introduction

- Related Work

- Method

- Experiments

- Limitations and Conclusions

- A. Additional Experiments

- B. Implementation Details

- C. Societal Impact

- References

Abstract

Recent advances in text-to-image generation models have unlocked vast potential for visual creativity. However, these models struggle with generation of consistent characters, a crucial aspect for numerous real-world applications such as story visualization, game development asset design, advertising, and more. Current methods typically rely on multiple pre-existing images of the target character or involve labor-intensive manual processes. In this work, we propose a fully automated solution for consistent character generation, with the sole input being a text prompt. We introduce an iterative procedure that, at each stage, identifies a coherent set of images sharing a similar identity and extracts a more consistent identity from this set. Our quantitative analysis demonstrates that our method strikes a better balance between prompt alignment and identity consistency compared to the baseline methods, and these findings are reinforced by a user study. To conclude, we showcase several practical applications of our approach.

1. Introduction

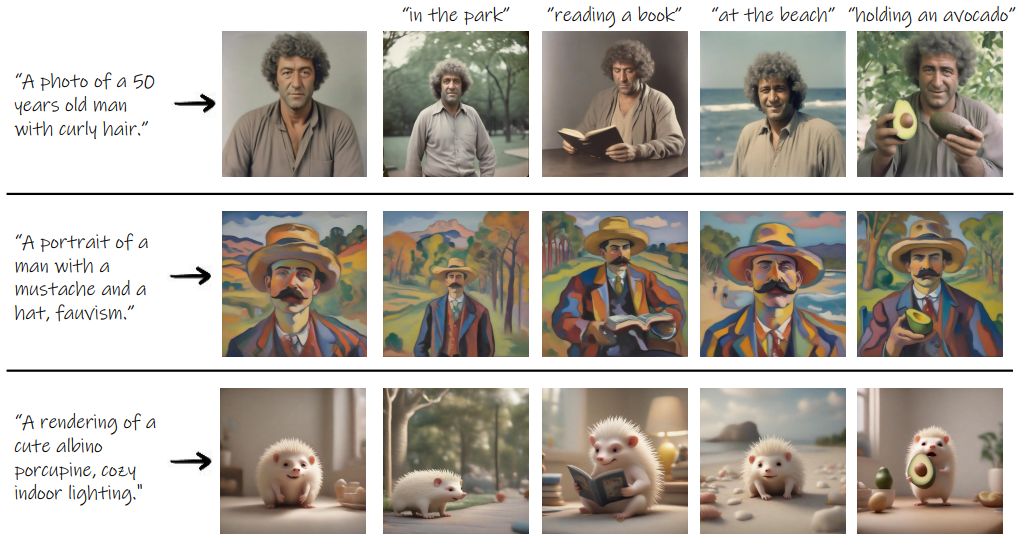

The ability to maintain consistency of generated visual content across various contexts, as shown in Figure 1, plays a central role in numerous creative endeavors. These include illustrating a book, crafting a brand, creating comics, developing presentations, designing webpages, and more. Such consistency serves as the foundation for establishing brand identity, facilitating storytelling, enhancing communication, and nurturing emotional engagement.

Despite the increasingly impressive abilities of text-to-image generative models, these models struggle with such consistent generation, a shortcoming that we aim to rectify in this work. Specifically, we introduce the task of consistent character generation, where given an input text prompt describing a character, we derive a representation that enables generating consistent depictions of the same character in novel contexts. Although we refer to characters throughout this paper, our work is in fact applicable to visual subjects in general.

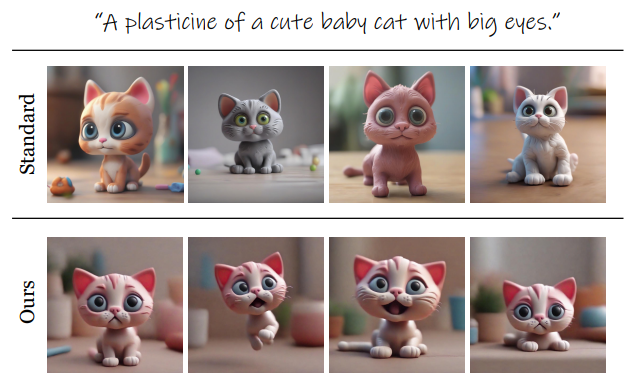

Consider, for example, an illustrator working on a Plasticine cat character. As demonstrated in Figure 2, providing a state-of-the-art text-to-image model with a prompt describing the character results in a variety of outcomes, which may lack consistency (top row). In contrast, in this work we show how to distill a consistent representation of the cat (second row), which can then be used to depict the same character in a multitude of different contexts.

The widespread popularity of text-to-image generative models [57, 63, 69, 72], combined with the need for consistent character generation, has already spawned a variety of ad hoc solutions. These include, for example, using celebrity names in prompts [64] for creating consistent humans, or using image variations [63] and filtering them manually by similarity [65]. In contrast to these ad hoc, manually intensive solutions, we propose a fully automatic, principled approach to consistent character generation.

The academic works most closely related to our setting are ones dealing with personalization [20, 70] and story generation [24, 36, 62]. Some of these methods derive a representation for a given character from several user-provided images [20, 24, 70]. Others cannot generalize to novel characters that are not in the training data [62], or rely on textual inversion of an existing depiction of a human face [36].

In this work, we argue that in many applications the goal is to generate some consistent character, rather than visually matching a specific appearance. Thus, we address a new setting, where we aim to automatically distill a consistent representation of a character that is only required to comply with a single natural language description. Our method does not require any images of the target character as input; thus, it enables creating a novel consistent character that does not necessarily resemble any existing visual depiction.

Our fully automated solution to the task of consistent character generation is based on the assumption that a sufficiently large set of generated images, for a certain prompt, will contain groups of images with shared characteristics. Given such a cluster, one can extract a representation that captures the “common ground” among its images. Repeating the process with this representation, we can increase the consistency among the generated images, while still remaining faithful to the original input prompt.

We start by generating a gallery of images based on the provided text prompt, and embed them in a Euclidean space using a pre-trained feature extractor. Next, we cluster these embeddings, and choose the most cohesive cluster to serve as the input for a personalization method that attempts to extract a consistent identity. We then use the resulting model to generate the next gallery of images, which should exhibit more consistency, while still depicting the input prompt. This process is repeated iteratively until convergence.
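The loop described above can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions, not the implementation used in this work: the callables `generate`, `embed`, and `personalize` are hypothetical placeholders for the text-to-image model, the pre-trained feature extractor, and the personalization method; plain k-means with a deterministic farthest-point initialization stands in for the clustering step; cohesion is assumed to be the mean squared distance of a cluster's members to its centroid; and a fixed number of rounds replaces a convergence test.

```python
from typing import Callable, List, Sequence

Vector = Sequence[float]


def sq_dist(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two embeddings."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def centroid(points: List[Vector]) -> List[float]:
    """Coordinate-wise mean of a non-empty list of embeddings."""
    n = len(points)
    return [sum(coord) / n for coord in zip(*points)]


def kmeans(points: List[Vector], k: int, iters: int = 50) -> List[int]:
    """Lloyd's k-means with farthest-point initialization (an assumed choice)."""
    centers = [list(points[0])]
    while len(centers) < k:
        # Pick the point farthest from all centers chosen so far.
        far = max(points, key=lambda p: min(sq_dist(p, c) for c in centers))
        centers.append(list(far))
    labels = None
    for _ in range(iters):
        new_labels = [min(range(k), key=lambda c: sq_dist(p, centers[c]))
                      for p in points]
        if new_labels == labels:  # assignments stopped changing
            break
        labels = new_labels
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the old center if a cluster becomes empty
                centers[c] = centroid(members)
    return labels


def most_cohesive_cluster(points: List[Vector], k: int,
                          min_size: int = 2) -> List[int]:
    """Indices of the cluster with the smallest mean squared distance to its centroid."""
    labels = kmeans(points, k)
    best, best_score = [], float("inf")
    for c in range(k):
        idx = [i for i, lab in enumerate(labels) if lab == c]
        if len(idx) < min_size:  # ignore clusters too small to be useful
            continue
        members = [points[i] for i in idx]
        mu = centroid(members)
        score = sum(sq_dist(p, mu) for p in members) / len(members)
        if score < best_score:
            best, best_score = idx, score
    return best


def distill_identity(prompt: str, generate: Callable, embed: Callable,
                     personalize: Callable, k: int = 3,
                     gallery_size: int = 16, rounds: int = 3):
    """Hypothetical outer loop: generate, embed, cluster, personalize, repeat."""
    model = None  # None stands for the unmodified text-to-image model
    for _ in range(rounds):  # fixed round count instead of a convergence test
        images = generate(prompt, model, gallery_size)
        feats = [embed(img) for img in images]
        chosen = most_cohesive_cluster(feats, k)
        model = personalize(model, [images[i] for i in chosen])
    return model
```

In this sketch, each round narrows the gallery to its tightest cluster before personalizing on it, so the next gallery is generated from a model that has already been pulled toward one identity.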

We evaluate our method quantitatively and qualitatively against several baselines, and conduct a user study. Finally, we present several applications of our method.

In summary, our contributions are: (1) we formalize the task of consistent character generation, (2) we propose a novel solution to this task, and (3) we evaluate our method quantitatively and qualitatively, in addition to a user study, to demonstrate its effectiveness.

This paper is available on arXiv under the CC BY-NC-ND 4.0 DEED license.

Omri, Yael, Moab, Daniel and Dani performed this work while working at Google.